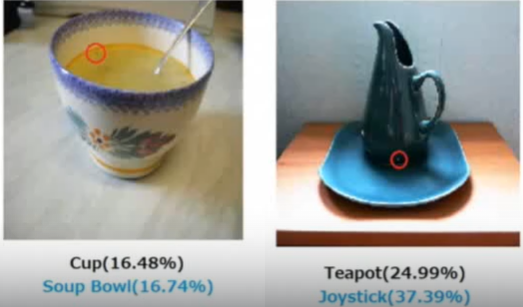

References [1, 2] give an example in which, after a slight change to an image (Fig. 1), the GoogLeNet neural network (NN), trained on ImageNet [3], misclassifies the altered image even though it is visually almost indistinguishable from the original.

Fig. 1. Original (left) and altered images
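The source does not say how the altered image in Fig. 1 was produced. A common way to build such a perturbation is a sign-gradient step (the fast gradient sign method). The sketch below applies one such step to a toy linear softmax classifier in NumPy instead of GoogLeNet; the model, its size, and the names `fgsm_step` and `cross_entropy` are illustrative assumptions.

```python
import numpy as np

def softmax(z):
    z = z - z.max()                      # shift for numerical stability
    e = np.exp(z)
    return e / e.sum()

def cross_entropy(x, W, b, label):
    return float(-np.log(softmax(W @ x + b)[label]))

def fgsm_step(x, W, b, label, eps):
    """One sign-gradient step on a linear softmax classifier (toy stand-in for an NN)."""
    p = softmax(W @ x + b)
    onehot = np.zeros_like(p)
    onehot[label] = 1.0
    grad_x = W.T @ (p - onehot)          # gradient of the cross-entropy loss w.r.t. x
    return np.clip(x + eps * np.sign(grad_x), 0.0, 1.0)

rng = np.random.default_rng(0)
n_pixels, n_classes = 64, 3              # hypothetical toy sizes
W = rng.normal(size=(n_classes, n_pixels))
b = np.zeros(n_classes)
x = rng.uniform(0.2, 0.8, size=n_pixels) # "image" with pixel values in [0, 1]
label = int(np.argmax(W @ x + b))        # class currently predicted by the model

x_adv = fgsm_step(x, W, b, label, eps=0.05)
loss_before = cross_entropy(x, W, b, label)
loss_after = cross_entropy(x_adv, W, b, label)
max_change = float(np.abs(x_adv - x).max())
```

Each pixel moves by at most `eps`, yet the loss on the originally predicted class grows; with a deep network the same idea needs the gradient from the framework's automatic differentiation.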

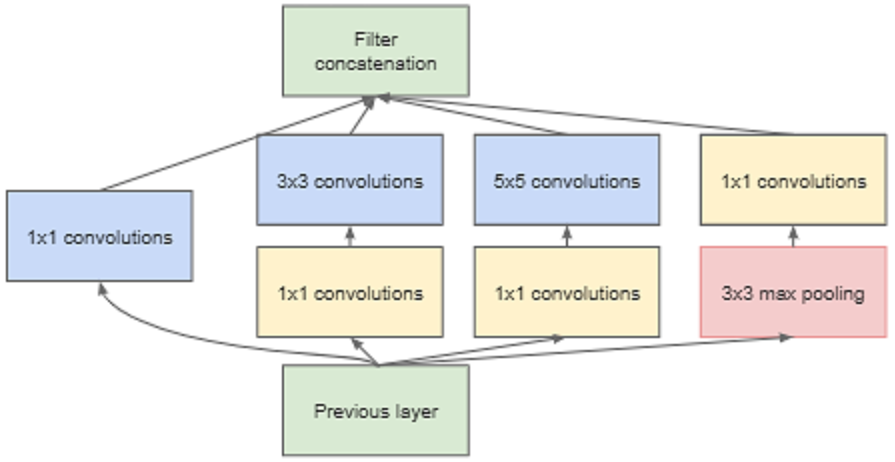

GoogLeNet is also known as Inception V1 [4]. This NN (Figs. 2 and 3) is built from inception modules.

Fig. 2. GoogLeNet inception module.

Fig. 3. GoogLeNet inception module with dimensionality reduction.

Such alterations are cited as examples of successful attacks on NNs.

Reference [2] shows an example of a successful attack on the AlexNet NN [5], also trained on ImageNet: after a single pixel of the image is changed, the NN changes its prediction (Fig. 4).

Fig. 4. One-pixel attacks on AlexNet
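To give a sense of how small a one-pixel change is, the sketch below overwrites a single pixel of a 224 x 224 image and measures the result. The image, the pixel position, and the color are made up for illustration. Which pixel and color actually flip a given NN's prediction has to be found by a search, which is not shown here.

```python
import numpy as np

def change_one_pixel(img, row, col, rgb):
    """Return a copy of img (H, W, 3) with one pixel replaced by rgb."""
    out = img.copy()
    out[row, col] = rgb
    return out

img = np.full((224, 224, 3), 0.5, dtype=np.float32)      # uniform gray test image
attacked = change_one_pixel(img, 100, 120, (1.0, 0.0, 0.0))

changed_mask = np.any(attacked != img, axis=-1)           # True where a pixel differs
n_changed = int(changed_mask.sum())
mse = float(((attacked - img) ** 2).mean())
print(n_changed, mse)
```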

An attack on NNs trained on ImageNet (Sec. 2) is carried out with the image shown on the right in Fig. 5.

Fig. 5. Original and attacking images

However, such attacks, while effective against one NN, may have no effect at all on NNs with a different architecture.

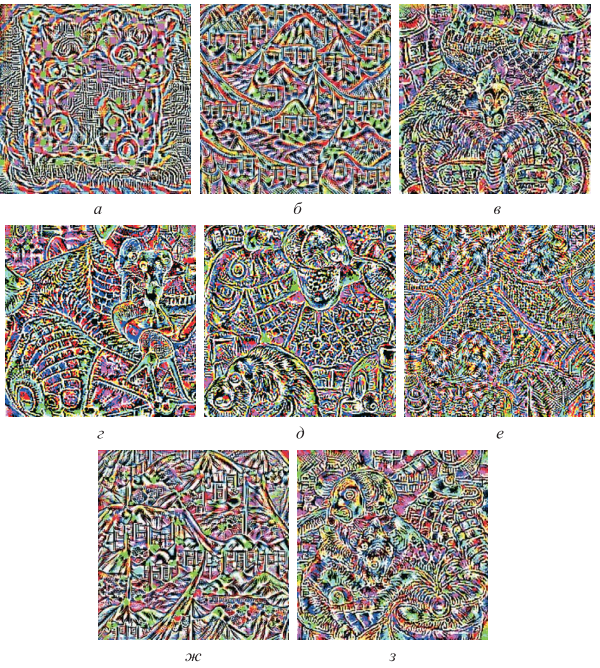

One way to make attacks more universal is to use adversarial noise [6]. Examples of such noise, taken from [6], are shown in Fig. 6.

Fig. 6. Examples of adversarial noise:

(a) AlexNet; (b) VGG19; (c) ResNet152; (d) DenseNet; (e) GoogLeNet; (f) MobileNet; (g) VGG11; (h) ResNet18.

Visually, the original and noisy images are hard to tell apart, but the classification accuracy on the noisy images is substantially lower than on the originals [6].
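A noise pattern is typically added to an image under an amplitude bound so that the result stays visually close to the original. The sketch below shows only that step. The random noise is a placeholder for the synthesized noise of [6], and the bound `eps = 8 / 255` and the name `apply_noise` are assumptions for illustration.

```python
import numpy as np

def apply_noise(img, noise, eps):
    """Add noise limited to [-eps, eps] per value and keep pixels in [0, 1]."""
    delta = np.clip(noise, -eps, eps)
    return np.clip(img + delta, 0.0, 1.0)

rng = np.random.default_rng(1)
img = rng.uniform(0.0, 1.0, size=(224, 224, 3))     # stand-in for a real image
noise = rng.normal(scale=0.05, size=img.shape)      # placeholder, not the noise from [6]
eps = 8 / 255                                       # assumed amplitude bound

noisy = apply_noise(img, noise, eps)
linf = float(np.abs(noisy - img).max())             # largest per-value change
print(linf <= eps)
```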

An example of using noise to prepare a successful attack on the AlexNet NN [7] is shown in Fig. 7.

Fig. 7. Example of using noise for a successful attack on AlexNet

We check how harmful the altered images shown in Figs. 1, 5 and 7 are for a range of NNs trained on ImageNet; the networks and their predictions are listed in Table 1.

The NN predictions are printed by the program given in the appendix.

Table 1. NN predictions (the two highest-weighted predictions for each image)

| NN | Original: prediction | Original: weight | Noisy: prediction | Noisy: weight |
|---|---|---|---|---|
| **Panda** | | | | |
| Xception | giant_panda | 0.8934532 | giant_panda | 0.8685231 |
| | indri | 0.006895099 | indri | 0.012549218 |
| VGG16 | giant_panda | 0.29531986 | giant_panda | 0.49855888 |
| | gibbon | 0.20835872 | toy_poodle | 0.12609173 |
| VGG19 | giant_panda | 0.9475259 | giant_panda | 0.85687333 |
| | gibbon | 0.01658522 | indri | 0.07732392 |
| ResNet50 | giant_panda | 0.99900997 | giant_panda | 0.9984341 |
| | indri | 0.0004465563 | indri | 0.00094144914 |
| ResNet101 | giant_panda | 0.9800418 | giant_panda | 0.928511 |
| | toy_poodle | 0.004540715 | indri | 0.030445982 |
| ResNet152 | giant_panda | 0.9794937 | giant_panda | 0.98116606 |
| | indri | 0.019208707 | indri | 0.017197827 |
| ResNet50V2 | giant_panda | 0.9999914 | giant_panda | 0.999948 |
| | indri | 5.141379e-06 | indri | 2.7761647e-05 |
| ResNet101V2 | giant_panda | 0.9999256 | giant_panda | 0.99954647 |
| | indri | 7.1417715e-05 | indri | 0.0004399426 |
| ResNet152V2 | giant_panda | 0.99737144 | giant_panda | 0.9877182 |
| | indri | 0.0019275275 | indri | 0.010348079 |
| InceptionV3 | giant_panda | 0.78640085 | giant_panda | 0.69644904 |
| | indri | 0.0059863715 | indri | 0.009347004 |
| InceptionResNetV2 | giant_panda | 0.87451833 | giant_panda | 0.88990486 |
| | indri | 0.0035678335 | indri | 0.0034128423 |
| MobileNet | giant_panda | 0.9963903 | giant_panda | 0.9833499 |
| | indri | 0.00154353 | indri | 0.0147644915 |
| MobileNetV2 | giant_panda | 0.91432685 | giant_panda | 0.9175715 |
| | indri | 0.004121284 | indri | 0.0028103911 |
| DenseNet121 | giant_panda | 0.6411652 | giant_panda | 0.35457098 |
| | gibbon | 0.16831161 | gibbon | 0.27123645 |
| DenseNet169 | giant_panda | 0.99348277 | giant_panda | 0.9934942 |
| | gibbon | 0.0014070828 | indri | 0.0043741534 |
| DenseNet201 | giant_panda | 0.86581695 | giant_panda | 0.89746433 |
| | indri | 0.03975411 | indri | 0.031577703 |
| NASNetMobile | giant_panda | 0.9232004 | giant_panda | 0.91260135 |
| | indri | 0.001689267 | indri | 0.0022836388 |
| NASNetLarge | giant_panda | 0.9162965 | giant_panda | 0.910803 |
| | lesser_panda | 0.0013859468 | lesser_panda | 0.0012689099 |
| ConvNeXtTiny | giant_panda | 0.83502537 | giant_panda | 0.81756246 |
| | indri | 0.004105052 | indri | 0.014787479 |
| ConvNeXtSmall | giant_panda | 0.8786456 | giant_panda | 0.8769419 |
| | indri | 0.0034498249 | indri | 0.004605325 |
| ConvNeXtLarge | giant_panda | 0.9321051 | giant_panda | 0.932334 |
| | lesser_panda | 0.0033918915 | lesser_panda | 0.002519659 |
| ConvNeXtXLarge | giant_panda | 0.95751643 | giant_panda | 0.95650977 |
| | lesser_panda | 0.0048588356 | lesser_panda | 0.005052445 |
| EfficientNetB0 | giant_panda | 0.7300266 | giant_panda | 0.6690231 |
| | indri | 0.032585632 | indri | 0.029188804 |
| EfficientNetB1 | giant_panda | 0.950511 | giant_panda | 0.9585726 |
| | gibbon | 0.005032844 | lesser_panda | 0.0034036357 |
| EfficientNetB2 | giant_panda | 0.54397595 | giant_panda | 0.8525239 |
| | lesser_panda | 0.0942573 | lesser_panda | 0.010274735 |
| EfficientNetB3 | giant_panda | 0.5946984 | giant_panda | 0.71859056 |
| | indri | 0.06545867 | indri | 0.055300258 |
| EfficientNetB4 | giant_panda | 0.6508244 | giant_panda | 0.6376153 |
| | gibbon | 0.025846625 | gibbon | 0.020103889 |
| EfficientNetB5 | giant_panda | 0.81070524 | giant_panda | 0.79697144 |
| | lesser_panda | 0.008972366 | indri | 0.014907344 |
| EfficientNetB6 | giant_panda | 0.83647615 | giant_panda | 0.82969284 |
| | indri | 0.009402118 | indri | 0.015815089 |
| EfficientNetB7 | giant_panda | 0.8115224 | giant_panda | 0.80586946 |
| | lesser_panda | 0.0040551303 | lesser_panda | 0.002662837 |
| EfficientNetV2B0 | giant_panda | 0.8198102 | giant_panda | 0.78598225 |
| | lesser_panda | 0.006843959 | indri | 0.007068597 |
| EfficientNetV2B1 | giant_panda | 0.905874 | giant_panda | 0.87956053 |
| | lesser_panda | 0.010039191 | indri | 0.010512123 |
| EfficientNetV2B2 | giant_panda | 0.87766695 | giant_panda | 0.8547733 |
| | indri | 0.0064418796 | indri | 0.014298057 |
| EfficientNetV2B3 | giant_panda | 0.9379393 | giant_panda | 0.9322769 |
| | lesser_panda | 0.0024651065 | lesser_panda | 0.0024217644 |
| EfficientNetV2S | giant_panda | 0.870769 | giant_panda | 0.84205574 |
| | indri | 0.007501943 | indri | 0.016715052 |
| EfficientNetV2M | giant_panda | 0.72141683 | giant_panda | 0.6123171 |
| | indri | 0.03916026 | indri | 0.09327198 |
| EfficientNetV2L | giant_panda | 0.8231075 | giant_panda | 0.7771141 |
| | indri | 0.007467236 | indri | 0.016257575 |
| **Bowl of broth** | | | | |
| Xception | consomme | 0.4769622 | eggnog | 0.26429173 |
| | eggnog | 0.369737 | consomme | 0.20811778 |
| VGG16 | espresso | 0.29913118 | eggnog | 0.4284617 |
| | eggnog | 0.28693104 | espresso | 0.09198572 |
| VGG19 | eggnog | 0.40479746 | eggnog | 0.55078036 |
| | espresso | 0.14222756 | candle | 0.13904397 |
| ResNet50 | eggnog | 0.610125 | eggnog | 0.73905134 |
| | consomme | 0.19917005 | candle | 0.1121889 |
| ResNet101 | eggnog | 0.44710666 | candle | 0.9581977 |
| | espresso | 0.26310477 | eggnog | 0.023749026 |
| ResNet152 | eggnog | 0.49946448 | candle | 0.5919975 |
| | consomme | 0.29502374 | eggnog | 0.37803417 |
| ResNet50V2 | consomme | 0.7435905 | consomme | 0.55259335 |
| | espresso | 0.09107772 | candle | 0.2439611 |
| ResNet101V2 | eggnog | 0.69917256 | eggnog | 0.42238367 |
| | espresso | 0.17949456 | consomme | 0.1465741 |
| ResNet152V2 | consomme | 0.56592834 | consomme | 0.42126563 |
| | eggnog | 0.35111922 | eggnog | 0.28072673 |
| InceptionV3 | eggnog | 0.8450097 | eggnog | 0.8930665 |
| | consomme | 0.10611778 | consomme | 0.046935618 |
| InceptionResNetV2 | eggnog | 0.61004436 | eggnog | 0.8671397 |
| | consomme | 0.25859842 | consomme | 0.059002116 |
| MobileNet | eggnog | 0.3772939 | consomme | 0.36373577 |
| | consomme | 0.3723965 | candle | 0.30248508 |
| MobileNetV2 | eggnog | 0.74664634 | eggnog | 0.818449 |
| | consomme | 0.19889948 | consomme | 0.110172115 |
| DenseNet121 | eggnog | 0.6716637 | eggnog | 0.7523846 |
| | consomme | 0.15843609 | consomme | 0.04910938 |
| DenseNet169 | eggnog | 0.6745572 | eggnog | 0.80882406 |
| | consomme | 0.21082787 | consomme | 0.08539948 |
| DenseNet201 | eggnog | 0.5806987 | eggnog | 0.5637367 |
| | consomme | 0.37859577 | consomme | 0.22350387 |
| NASNetMobile | consomme | 0.37425083 | consomme | 0.5366754 |
| | eggnog | 0.28989267 | eggnog | 0.1794022 |
| NASNetLarge | eggnog | 0.8903488 | eggnog | 0.9094321 |
| | cup | 0.0048674275 | consomme | 0.0049209073 |
| ConvNeXtTiny | eggnog | 0.77363735 | eggnog | 0.8174946 |
| | soup_bowl | 0.05341066 | cup | 0.032327835 |
| ConvNeXtSmall | eggnog | 0.7953768 | eggnog | 0.71390885 |
| | consomme | 0.046404447 | cup | 0.068947665 |
| ConvNeXtLarge | eggnog | 0.64079994 | eggnog | 0.4498356 |
| | soup_bowl | 0.13861178 | consomme | 0.15918107 |
| ConvNeXtXLarge | eggnog | 0.6320851 | consomme | 0.36625546 |
| | consomme | 0.19811822 | eggnog | 0.31976825 |
| EfficientNetB0 | eggnog | 0.3809768 | eggnog | 0.6290149 |
| | consomme | 0.14101312 | consomme | 0.078249924 |
| EfficientNetB1 | eggnog | 0.60376394 | eggnog | 0.41322622 |
| | consomme | 0.11916207 | candle | 0.3276879 |
| EfficientNetB2 | eggnog | 0.34833598 | eggnog | 0.44046506 |
| | espresso | 0.16403706 | espresso | 0.10790814 |
| EfficientNetB3 | eggnog | 0.28097656 | eggnog | 0.6935557 |
| | soup_bowl | 0.23056771 | consomme | 0.094831824 |
| EfficientNetB4 | eggnog | 0.4320463 | eggnog | 0.33854678 |
| | consomme | 0.21700177 | consomme | 0.2933674 |
| EfficientNetB5 | eggnog | 0.27524996 | eggnog | 0.6045266 |
| | consomme | 0.2478749 | consomme | 0.17794715 |
| EfficientNetB6 | eggnog | 0.27092734 | eggnog | 0.47768772 |
| | consomme | 0.1779286 | consomme | 0.18869795 |
| EfficientNetB7 | consomme | 0.25540447 | eggnog | 0.44616348 |
| | soup_bowl | 0.18963791 | consomme | 0.304498 |
| EfficientNetV2B0 | eggnog | 0.7116632 | eggnog | 0.7732018 |
| | consomme | 0.13334379 | consomme | 0.057252146 |
| EfficientNetV2B1 | eggnog | 0.62781626 | eggnog | 0.64746225 |
| | consomme | 0.10121088 | candle | 0.16391389 |
| EfficientNetV2B2 | eggnog | 0.24424511 | eggnog | 0.28804588 |
| | soup_bowl | 0.19228119 | cup | 0.17587207 |
| EfficientNetV2B3 | eggnog | 0.5526559 | eggnog | 0.6179782 |
| | consomme | 0.16055174 | consomme | 0.13450757 |
| EfficientNetV2S | eggnog | 0.78391105 | eggnog | 0.52456164 |
| | consomme | 0.05584524 | consomme | 0.22690299 |
| EfficientNetV2M | eggnog | 0.64184594 | eggnog | 0.44421917 |
| | consomme | 0.07962157 | cup | 0.031200623 |
| EfficientNetV2L | eggnog | 0.46971226 | eggnog | 0.5862437 |
| | consomme | 0.24500662 | consomme | 0.08160363 |
| **Bird (rbs = red-backed_sandpiper)** | | | | |
| Xception | rbs | 0.9373782 | rbs | 0.9421061 |
| | dowitcher | 0.0009936722 | dowitcher | 0.006671094 |
| VGG16 | rbs | 0.99296546 | rbs | 0.95829177 |
| | dowitcher | 0.0065512755 | dowitcher | 0.034031138 |
| VGG19 | rbs | 0.98258233 | rbs | 0.76117235 |
| | dowitcher | 0.015454282 | brambling | 0.1649745 |
| ResNet50 | rbs | 0.9945057 | rbs | 0.8463953 |
| | dowitcher | 0.004733121 | dowitcher | 0.11212878 |
| ResNet101 | rbs | 0.99510777 | rbs | 0.70046127 |
| | dowitcher | 0.003608791 | dowitcher | 0.26891673 |
| ResNet152 | rbs | 0.9982114 | rbs | 0.97655004 |
| | dowitcher | 0.0013238922 | dowitcher | 0.014266697 |
| ResNet50V2 | rbs | 0.9906026 | rbs | 0.98387384 |
| | dowitcher | 0.008500421 | dowitcher | 0.014791488 |
| ResNet101V2 | rbs | 0.9997391 | rbs | 0.9987062 |
| | dowitcher | 0.00020983515 | dowitcher | 0.0012432294 |
| ResNet152V2 | rbs | 0.9999851 | rbs | 0.9999176 |
| | dowitcher | 1.9361084e-06 | dowitcher | 5.1032715e-05 |
| InceptionV3 | rbs | 0.92500746 | rbs | 0.9272541 |
| | dowitcher | 0.00485395 | dowitcher | 0.017696658 |
| InceptionResNetV2 | rbs | 0.9272363 | rbs | 0.93487924 |
| | dowitcher | 0.0016747853 | dowitcher | 0.002409931 |
| MobileNet | rbs | 0.97926563 | rbs | 0.97908795 |
| | dowitcher | 0.018759424 | dowitcher | 0.011371828 |
| MobileNetV2 | rbs | 0.8973367 | rbs | 0.72216326 |
| | ruddy_turnstone | 0.015419056 | dowitcher | 0.09924709 |
| DenseNet121 | rbs | 0.97185004 | rbs | 0.65919155 |
| | dowitcher | 0.026844697 | dowitcher | 0.32818854 |
| DenseNet169 | rbs | 0.9965913 | rbs | 0.8583146 |
| | dowitcher | 0.0029490215 | dowitcher | 0.13460773 |
| DenseNet201 | rbs | 0.994795 | rbs | 0.98502433 |
| | dowitcher | 0.0028738263 | dowitcher | 0.009322146 |
| NASNetMobile | rbs | 0.92656285 | rbs | 0.87028766 |
| | dowitcher | 0.0076086614 | dowitcher | 0.022089344 |
| NASNetLarge | rbs | 0.9210256 | rbs | 0.8988672 |
| | dowitcher | 0.0018086635 | dowitcher | 0.016565517 |
| ConvNeXtTiny | rbs | 0.8871051 | rbs | 0.80185235 |
| | dowitcher | 0.004807195 | dowitcher | 0.0050164973 |
| ConvNeXtSmall | rbs | 0.90524435 | rbs | 0.8725227 |
| | dowitcher | 0.0026886899 | crossword_puzzle | 0.006224967 |
| ConvNeXtLarge | rbs | 0.9365929 | rbs | 0.9214403 |
| | dowitcher | 0.00709174 | dowitcher | 0.009951768 |
| ConvNeXtXLarge | rbs | 0.9616423 | rbs | 0.9498634 |
| | dowitcher | 0.0026674685 | dowitcher | 0.005683166 |
| EfficientNetB0 | rbs | 0.9329818 | rbs | 0.9495701 |
| | ruddy_turnstone | 0.006443314 | dowitcher | 0.015777139 |
| EfficientNetB1 | rbs | 0.9059463 | rbs | 0.90166867 |
| | dowitcher | 0.0063025053 | dowitcher | 0.011085955 |
| EfficientNetB2 | rbs | 0.9058235 | rbs | 0.88913435 |
| | dowitcher | 0.0013199622 | dowitcher | 0.013959255 |
| EfficientNetB3 | rbs | 0.9134904 | rbs | 0.9149238 |
| | dowitcher | 0.0019892226 | dowitcher | 0.0055353707 |
| EfficientNetB4 | rbs | 0.75999826 | rbs | 0.7595083 |
| | dowitcher | 0.0016400524 | dowitcher | 0.00415613 |
| EfficientNetB5 | rbs | 0.86962 | rbs | 0.858953 |
| | dowitcher | 0.0044280756 | dowitcher | 0.010272025 |
| EfficientNetB6 | rbs | 0.7415047 | rbs | 0.77854013 |
| | dowitcher | 0.004406076 | dowitcher | 0.007087492 |
| EfficientNetB7 | rbs | 0.80603844 | rbs | 0.7811539 |
| | dowitcher | 0.005759541 | dowitcher | 0.008795305 |
| EfficientNetV2B0 | rbs | 0.9352422 | rbs | 0.9352263 |
| | ruddy_turnstone | 0.0026096187 | dowitcher | 0.0038579428 |
| EfficientNetV2B1 | rbs | 0.94026804 | rbs | 0.94414705 |
| | dowitcher | 0.006028206 | dowitcher | 0.012713395 |
| EfficientNetV2B2 | rbs | 0.93339777 | rbs | 0.9421321 |
| | dowitcher | 0.0029742066 | dowitcher | 0.006107013 |
| EfficientNetV2B3 | rbs | 0.9359722 | rbs | 0.92271745 |
| | dowitcher | 0.001212289 | dowitcher | 0.0030449282 |
| EfficientNetV2S | rbs | 0.8817812 | rbs | 0.89386517 |
| | dowitcher | 0.0042605316 | dowitcher | 0.006788064 |
| EfficientNetV2M | rbs | 0.70686203 | rbs | 0.4808183 |
| | dowitcher | 0.009289558 | dowitcher | 0.011369884 |
| EfficientNetV2L | rbs | 0.6183409 | rbs | 0.68018055 |
| | dowitcher | 0.011672224 | dowitcher | 0.013342938 |

Note. Table 1 uses the abbreviation rbs for red-backed_sandpiper.
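One simple way to read Table 1 is to call an attack successful for a given NN when the top-1 label changes between the original and the altered image. The sketch below applies that criterion to a few rows copied from the table. The criterion itself and the name `attack_succeeded` are assumptions made for illustration, not something the source states.

```python
def attack_succeeded(original_top1, noisy_top1):
    """Assumed criterion: the attack counts as successful if the top-1 label changed."""
    return original_top1 != noisy_top1

# (image, NN, top-1 for the original, top-1 for the altered image), taken from Table 1
rows = [
    ("Panda", "ResNet50", "giant_panda", "giant_panda"),
    ("Bowl of broth", "Xception", "consomme", "eggnog"),
    ("Bowl of broth", "VGG19", "eggnog", "eggnog"),
    ("Bowl of broth", "ResNet101", "eggnog", "candle"),
    ("Bird", "VGG16", "rbs", "rbs"),
]

flipped = [(img, nn) for img, nn, orig, noisy in rows
           if attack_succeeded(orig, noisy)]
print(flipped)
```

By this criterion, none of the listed NNs changes its top-1 label for the panda or the bird in Table 1; changes occur only for some NNs on the bowl image.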

With the parameter get_cls = 0, the same program computes the following measures of difference/similarity between the images:

Table 2. Measures of difference between the original and noisy images

| Measure | Panda (Fig. 1) | Bowl of broth (Fig. 5) | Bird (Fig. 7) |
|---|---|---|---|
| MSE | 0.001933 | 0.002427 | 0.003869 |
| PSNR | -27.13794 | -26.149346 | -24.124062 |
| 1 - SSIM | 0.066128 | 0.024277 | 0.203142 |
| E_dist | 17.05736 | 19.11353 | 24.132683 |
| Cos_dist | 0.003859 | 0.003058 | 0.007075 |
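Several of these measures are tied together by simple formulas, which gives a quick consistency check. For images with N values, E_dist = sqrt(N * MSE), and PSNR = 10 * log10(R^2 / MSE), where R is the peak pixel value. The sketch below is a plain NumPy version under the assumption that pixels lie in [0, 1] and images are 224 x 224 x 3; the name `image_metrics` is illustrative.

```python
import numpy as np

def image_metrics(a, b, peak=1.0):
    """MSE, PSNR, Euclidean and cosine distance between two images of equal shape."""
    av = a.ravel().astype(np.float64)
    bv = b.ravel().astype(np.float64)
    diff = av - bv
    mse = float((diff ** 2).mean())
    psnr = float(10.0 * np.log10(peak ** 2 / mse))
    e_dist = float(np.sqrt((diff ** 2).sum()))
    cos_dist = float(1.0 - av @ bv / (np.linalg.norm(av) * np.linalg.norm(bv)))
    return {"mse": mse, "psnr": psnr, "e_dist": e_dist, "cos_dist": cos_dist}

# Consistency check against the panda column of Table 2
n_values = 224 * 224 * 3
mse_panda = 0.001933
e_dist_from_mse = float(np.sqrt(n_values * mse_panda))       # compare with E_dist
psnr_peak_1 = float(10.0 * np.log10(1.0 / mse_panda))        # PSNR for R = 1

# Synthetic pair of images, only to exercise the function
rng = np.random.default_rng(0)
a = rng.uniform(size=(224, 224, 3))
b = np.clip(a + rng.normal(scale=0.04, size=a.shape), 0.0, 1.0)
m = image_metrics(a, b)
print(round(e_dist_from_mse, 3), round(psnr_peak_1, 3))
```

The E_dist recomputed from the MSE agrees with Table 2. The PSNR for R = 1 has the same magnitude as the tabulated value but the opposite sign, which is consistent with the MSE itself having been passed as the peak value when the table was produced.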

The NN predictions are printed by the following code:

    from sys import exit

    import numpy as np
    from keras.preprocessing import image
    import matplotlib.pyplot as plt

    get_cls = 1  # 1: print NN predictions; 0: compute image-difference measures
    mdl_no = 0
    mdl_lst = ['Xception', 'VGG16', 'VGG19', 'ResNet50', 'ResNet101', 'ResNet152',  # 0 - 5
               'ResNet50V2', 'ResNet101V2', 'ResNet152V2', 'InceptionV3',  # 6 - 9
               'InceptionResNetV2', 'MobileNet', 'MobileNetV2',  # 10 - 12
               'MobileNetV3Small', 'MobileNetV3Large',  # 13 - 14
               'DenseNet121', 'DenseNet169', 'DenseNet201',  # 15 - 17
               'NASNetMobile', 'NASNetLarge', 'ConvNeXtTiny', 'ConvNeXtSmall',  # 18 - 21
               'ConvNeXtBase', 'ConvNeXtLarge', 'ConvNeXtXLarge',  # 22 - 24
               'EfficientNetB0', 'EfficientNetB1', 'EfficientNetB2',  # 25 - 27
               'EfficientNetB3', 'EfficientNetB4', 'EfficientNetB5',  # 28 - 30
               'EfficientNetB6', 'EfficientNetB7', 'EfficientNetV2B0',  # 31 - 33
               'EfficientNetV2B1', 'EfficientNetV2B2', 'EfficientNetV2B3',  # 34 - 36
               'EfficientNetV2S', 'EfficientNetV2M', 'EfficientNetV2L']  # 37 - 39
    show_summary = 0
    show_img = 0

    # Image pair to compare; the last uncommented pair is the one used
    img_nm1 = 'panda_true.png'
    img_nm2 = 'panda_noise.png'
    img_nm1 = 'cup_true.png'  # cup.png, teapot.png, as_cheetah.png
    img_nm2 = 'cup_hole.png'
    # img_nm1 = 'bird_true.png'  # red-backed sandpiper
    # img_nm2 = 'bird_noise.png'  # or dowitcher
    imgs_list = [img_nm1, img_nm2]
    # imgs_list = ['elephant.jpg', 'wolf.jpg', 'amur_tiger.jpg']
    imgs_loaded = []

    def show_pics(imgs_list, imgs_loaded):
        # Show the images side by side, titled with their file names
        n_imgs = len(imgs_list)
        _, axs = plt.subplots(1, n_imgs, figsize = (7, 4))
        for i in range(n_imgs):
            ax1 = axs[i]
            ax1.set_axis_off()
            ax1.set_title(imgs_list[i])
            ax1.imshow(imgs_loaded[i])
        plt.show()

    if get_cls == 0:
        from skimage.metrics import mean_squared_error
        import cv2, scipy
        from skimage.metrics import structural_similarity as ssim
        from scipy.spatial.distance import euclidean as e_dist
        for img_nm in imgs_list:
            img = image.load_img(img_nm, target_size = (224, 224))
            img = image.img_to_array(img)  # Array of shape (224, 224, 3)
            img /= 255
            imgs_loaded.append(img)
        if show_img:
            show_pics(imgs_list, imgs_loaded)
        # Mean squared error
        image1 = imgs_loaded[0]
        image2 = imgs_loaded[1]
        mse = mean_squared_error(image1, image2)
        # mse2 = ((image1 - image2) ** 2).mean()
        # Peak signal-to-noise ratio
        # https://habr.com/ru/articles/126848/
        # The third argument of cv2.PSNR is the peak value R (1.0 for pixels in [0, 1]).
        # Passing mse there instead yields 10 * log10(mse), i.e. the negated
        # PSNR values listed in Table 2.
        psnr = cv2.PSNR(image1, image2, 1.0)
        # Structural similarity index
        # https://habr.com/ru/articles/126848/
        # https://scikit-image.org/docs/stable/auto_examples/transform/plot_ssim.html
        # score_ssim, diff = ssim(image1, image2, full = True, multichannel = True)
        # or (depending on the version)
        dr1 = image1.max() - image1.min()
        dr2 = image2.max() - image2.min()
        dr = max(dr1, dr2)
        # score_ssim, diff = ssim(image1, image2, full = True, channel_axis = 2, data_range = dr)
        score_ssim2, diff = ssim(image1.flatten(), image2.flatten(), full = True, data_range = dr)
        # Euclidean distance
        ec_dst = np.linalg.norm(image1 - image2)
        # ec_dst2 = e_dist(image1.flatten(), image2.flatten())
        # Cosine distance between the flattened images
        # https://docs.scipy.org/doc/scipy/reference/generated/scipy.spatial.distance.cosine.html
        cs_dst = scipy.spatial.distance.cosine(image1.flatten(), image2.flatten())
        print('mse =', round(mse, 6))
        # print('mse2 =', round(mse2, 6))
        print('psnr =', round(psnr, 6))
        # print('1 - ssim =', round(1 - score_ssim, 6))
        print('1 - ssim2 =', round(1 - score_ssim2, 6))
        print('ec_dst =', round(ec_dst, 6))
        # print('ec_dst2 =', round(ec_dst2, 6))
        print('cs_dst =', round(cs_dst, 6))
    else:
        from google.colab import drive
        drv = '/content/drive/'
        drive.mount(drv)
        # Path to the images in Colab
        pathToData = drv + 'My Drive/imgs/'

        # The helpers below read model, pri, dep and target_size,
        # which are set for each NN in the loop that follows

        def predict(imgs, imgs_list):
            print('Prediction:')
            top = 2  # Number of predictions per image
            k = -1
            for x in imgs:
                features = model.predict(x, verbose = 0)  # features.shape: (1, 1000)
                dp = dep(features, top = top)[0]
                k += 1
                print(imgs_list[k])
                for p in dp:
                    print(p)

        # Returns the list of loaded and preprocessed images
        def load_imgs(pathToData, imgs_list):
            imgs_loaded = []
            imgs_for_show = []
            for img in imgs_list:
                img_path = pathToData + img
                img = image.load_img(img_path, target_size = target_size)
                x = image.img_to_array(img)  # Array of shape target_size + (3,)
                imgs_for_show.append(x / 255)
                # Add a leading axis of length 1 (the batch dimension)
                x = np.expand_dims(x, axis = 0)
                # Prepare the data for the input of the selected NN
                x = pri(x)
                imgs_loaded.append(x)
            if show_img:
                show_pics(imgs_list, imgs_for_show)
                exit()
            return imgs_loaded

        for mdl_no in range(len(mdl_lst)):
            print('mdl_name =', mdl_lst[mdl_no])
            target_size = (224, 224)
            # MobileNetV3Small, MobileNetV3Large and ConvNeXtBase are skipped
            if mdl_no in [13, 14, 22]: continue
            if mdl_no == 0:  # Select the NN class and its helper functions
                from keras.applications.xception import Xception as img_net
                from keras.applications.xception import preprocess_input as pri
                from keras.applications.xception import decode_predictions as dep
                target_size = (299, 299)
            elif mdl_no == 1:
                from keras.applications.vgg16 import VGG16 as img_net
                from keras.applications.vgg16 import preprocess_input as pri
                from keras.applications.vgg16 import decode_predictions as dep
            elif mdl_no == 2:
                from keras.applications.vgg19 import VGG19 as img_net
                from keras.applications.vgg19 import preprocess_input as pri
                from keras.applications.vgg19 import decode_predictions as dep
            elif mdl_no in [3, 4, 5]:
                if mdl_no == 3:
                    from keras.applications.resnet import ResNet50 as img_net
                elif mdl_no == 4:
                    from keras.applications.resnet import ResNet101 as img_net
                elif mdl_no == 5:
                    from keras.applications.resnet import ResNet152 as img_net
                from keras.applications.resnet import preprocess_input as pri
                from keras.applications.resnet import decode_predictions as dep
            elif mdl_no in [6, 7, 8]:
                if mdl_no == 6:
                    from keras.applications.resnet_v2 import ResNet50V2 as img_net
                elif mdl_no == 7:
                    from keras.applications.resnet_v2 import ResNet101V2 as img_net
                elif mdl_no == 8:
                    from keras.applications.resnet_v2 import ResNet152V2 as img_net
                from keras.applications.resnet_v2 import preprocess_input as pri
                from keras.applications.resnet_v2 import decode_predictions as dep
            elif mdl_no == 9:
                from keras.applications.inception_v3 import InceptionV3 as img_net
                from keras.applications.inception_v3 import preprocess_input as pri
                from keras.applications.inception_v3 import decode_predictions as dep
                target_size = (299, 299)
            elif mdl_no == 10:
                from keras.applications.inception_resnet_v2 import InceptionResNetV2 as img_net
                from keras.applications.inception_resnet_v2 import preprocess_input as pri
                from keras.applications.inception_resnet_v2 import decode_predictions as dep
                target_size = (299, 299)
            elif mdl_no == 11:
                from keras.applications.mobilenet import MobileNet as img_net
                from keras.applications.mobilenet import preprocess_input as pri
                from keras.applications.mobilenet import decode_predictions as dep
            elif mdl_no == 12:
                from keras.applications.mobilenet_v2 import MobileNetV2 as img_net
                from keras.applications.mobilenet_v2 import preprocess_input as pri
                from keras.applications.mobilenet_v2 import decode_predictions as dep
            elif mdl_no in [13, 14]:
                if mdl_no == 13:
                    from keras.applications.mobilenet_v3 import MobileNetV3Small as img_net
                elif mdl_no == 14:
                    from keras.applications.mobilenet_v3 import MobileNetV3Large as img_net
                from keras.applications.mobilenet_v3 import preprocess_input as pri
                from keras.applications.mobilenet_v3 import decode_predictions as dep
            elif mdl_no in [15, 16, 17]:
                if mdl_no == 15:
                    from keras.applications.densenet import DenseNet121 as img_net
                elif mdl_no == 16:
                    from keras.applications.densenet import DenseNet169 as img_net
                elif mdl_no == 17:
                    from keras.applications.densenet import DenseNet201 as img_net
                from keras.applications.densenet import preprocess_input as pri
                from keras.applications.densenet import decode_predictions as dep
            elif mdl_no in [18, 19]:
                if mdl_no == 18:
                    from keras.applications.nasnet import NASNetMobile as img_net
                elif mdl_no == 19:
                    from keras.applications.nasnet import NASNetLarge as img_net
                    target_size = (331, 331)
                from keras.applications.nasnet import preprocess_input as pri
                from keras.applications.nasnet import decode_predictions as dep
            elif mdl_no in [20, 21, 23, 24]:
                if mdl_no == 20:
                    from keras.applications.convnext import ConvNeXtTiny as img_net
                elif mdl_no == 21:
                    from keras.applications.convnext import ConvNeXtSmall as img_net
                elif mdl_no == 22:
                    from keras.applications.convnext import ConvNeXtBase as img_net
                elif mdl_no == 23:
                    from keras.applications.convnext import ConvNeXtLarge as img_net
                elif mdl_no == 24:
                    from keras.applications.convnext import ConvNeXtXLarge as img_net
                from keras.applications.convnext import preprocess_input as pri
                from keras.applications.convnext import decode_predictions as dep
            elif mdl_no in [25, 26, 27, 28, 29, 30, 31, 32]:  # efficientnet
                if mdl_no == 25:
                    from keras.applications.efficientnet import EfficientNetB0 as img_net
                elif mdl_no == 26:
                    from keras.applications.efficientnet import EfficientNetB1 as img_net
                    target_size = (240, 240)
                elif mdl_no == 27:
                    from keras.applications.efficientnet import EfficientNetB2 as img_net
                    target_size = (260, 260)
                elif mdl_no == 28:
                    from keras.applications.efficientnet import EfficientNetB3 as img_net
                    target_size = (300, 300)
                elif mdl_no == 29:
                    from keras.applications.efficientnet import EfficientNetB4 as img_net
                    target_size = (380, 380)
                elif mdl_no == 30:
                    from keras.applications.efficientnet import EfficientNetB5 as img_net
                    target_size = (456, 456)
                elif mdl_no == 31:
                    from keras.applications.efficientnet import EfficientNetB6 as img_net
                    target_size = (528, 528)
                elif mdl_no == 32:
                    from keras.applications.efficientnet import EfficientNetB7 as img_net
                    target_size = (600, 600)
                from keras.applications.efficientnet import preprocess_input as pri
                from keras.applications.efficientnet import decode_predictions as dep
            elif mdl_no in [33, 34, 35, 36, 37, 38, 39]:  # efficientnet_v2
                if mdl_no == 33:
                    from keras.applications.efficientnet_v2 import EfficientNetV2B0 as img_net
                elif mdl_no == 34:
                    from keras.applications.efficientnet_v2 import EfficientNetV2B1 as img_net
                    target_size = (240, 240)
                elif mdl_no == 35:
                    from keras.applications.efficientnet_v2 import EfficientNetV2B2 as img_net
                    target_size = (260, 260)
                elif mdl_no == 36:
                    from keras.applications.efficientnet_v2 import EfficientNetV2B3 as img_net
                    target_size = (300, 300)
                elif mdl_no == 37:
                    from keras.applications.efficientnet_v2 import EfficientNetV2S as img_net
                    target_size = (384, 384)
                elif mdl_no == 38:
                    from keras.applications.efficientnet_v2 import EfficientNetV2M as img_net
                    target_size = (480, 480)
                elif mdl_no == 39:
                    from keras.applications.efficientnet_v2 import EfficientNetV2L as img_net
                    target_size = (480, 480)
                from keras.applications.efficientnet_v2 import preprocess_input as pri
                from keras.applications.efficientnet_v2 import decode_predictions as dep
            # Load the images
            imgs_loaded = load_imgs(pathToData, imgs_list)
            model = img_net(weights = 'imagenet', include_top = True)
            if show_summary:
                model.summary()  # Print information about the NN layers
            # Prediction
            predict(imgs_loaded, imgs_list)
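The model-selection chain in the program could also be written as a lookup table. The sketch below is one possible variant covering only a few of the models; the names `MODEL_SPECS`, `model_spec` and `load_model_parts` are hypothetical. It relies on each `keras.applications` submodule exposing the model class together with `preprocess_input` and `decode_predictions`, as the imports in the program do.

```python
import importlib

# Model name -> (submodule of keras.applications, input size used in the program)
MODEL_SPECS = {
    "Xception": ("xception", (299, 299)),
    "VGG16": ("vgg16", (224, 224)),
    "ResNet50": ("resnet", (224, 224)),
    "InceptionV3": ("inception_v3", (299, 299)),
    "NASNetLarge": ("nasnet", (331, 331)),
    "EfficientNetB1": ("efficientnet", (240, 240)),
}

def model_spec(name):
    """Return (full module path, target_size) for a model name."""
    submodule, target_size = MODEL_SPECS[name]
    return "keras.applications." + submodule, target_size

def load_model_parts(name):
    """Import the model class and its helper functions (requires Keras)."""
    module_path, target_size = model_spec(name)
    module = importlib.import_module(module_path)
    return (getattr(module, name), module.preprocess_input,
            module.decode_predictions, target_size)

print(model_spec("NASNetLarge"))
```

With such a table, adding or skipping a model means editing one dictionary entry instead of a branch of the chain.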

1. A study of the robustness of convolutional neural networks (in Russian). https://habr.com/ru/companies/huawei/articles/509816/.

2. Arlazarov V. V., Limonova E. E. Robustness of neural-network-based artificial intelligence: theory and practice (in Russian). (FRC CSC RAS) https://rutube.ru/video/3dd79744f919052709014475b8169bd2/.

3. ImageNet. https://image-net.org/index.php.

4. Understanding GoogLeNet Model – CNN Architecture. https://www.geeksforgeeks.org/understanding-googlenet-model-cnn-architecture/.

5. AlexNet. https://en.wikipedia.org/wiki/AlexNet.

6. Karpenko A. P., Ovchinnikov V. A. How to trick a neural network? Synthesising noise to reduce the accuracy of neural network image classification (in Russian). Herald of the Bauman Moscow State Technical University, Series Instrument Engineering, 2021, no. 1 (134), pp. 102–119. DOI: https://doi.org/10.18698/0236-3933-2021-1-102-119.

7. FA_Attack_on_AlexNet. https://github.com/Carco-git/FA_Attack_on_AlexNet.